Decoding Loss Functions: Categorical Cross Entropy vs Sparse Categorical Cross Entropy

Categorical Cross Entropy and Sparse Categorical Cross Entropy are loss functions used for multi-class classification tasks. Both compute the same quantity; the choice between them depends on the format of the label data.

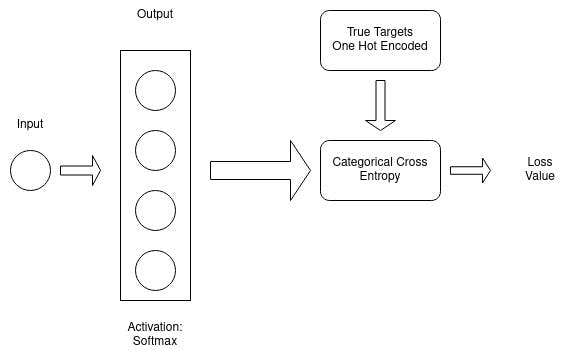

If the labels are one-hot encoded, categorical cross entropy is used.

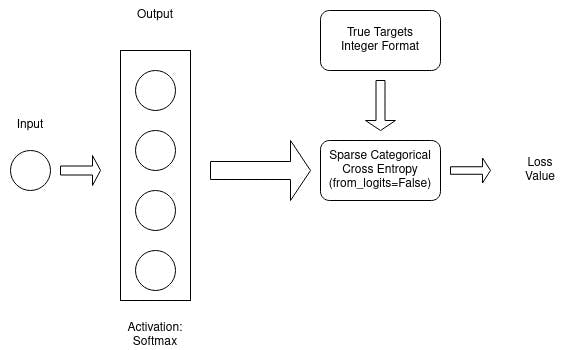

If the labels are integers, sparse categorical cross entropy is used. There is a small caveat in how this function is used: the documentation lists an argument called from_logits. We will see how that works later in this post.

Let's see them in action.

Data Preparation

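import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.losses import CategoricalCrossentropy, SparseCategoricalCrossentropy

input_x = np.array([[10], [7], [8], [9]])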

labels = list(range(4)) # [0, 1, 2, 3] # For Sparse Categorical Cross Entropy

labels_one_hot = tf.one_hot(labels, len(labels)) # For Categorical Cross Entropy

# [[1., 0., 0., 0.],

# [0., 1., 0., 0.],

# [0., 0., 1., 0.],

# [0., 0., 0., 1.]]

Model with Softmax Activation

I will create a model with one input and a single output layer that has four nodes and a softmax activation. The weights and biases are initialized to ones so that the loss value is reproducible.

model_with_softmax = Sequential()

model_with_softmax.add(Dense(units=4, kernel_initializer=tf.ones, bias_initializer=tf.ones, activation='softmax', input_shape=(1, )))

model_with_softmax.summary()

Now let's get the output from the model and calculate the loss value using categorical cross entropy.

output_with_softmax = model_with_softmax(input_x)

print(output_with_softmax) # tf.Tensor(

#[[0.25 0.25 0.25 0.25]

#[0.25 0.25 0.25 0.25]

#[0.25 0.25 0.25 0.25]

#[0.25 0.25 0.25 0.25]], shape=(4, 4), dtype=float32)

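If you know how the softmax function works, this output should make sense: every output node receives the same value (the weights and biases are all ones), so each of the four classes gets a probability of 0.25.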
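To make the softmax step concrete, here is a minimal plain-Python sketch (not the Keras implementation). The helper name `softmax` and the hand-computed logits are illustrative assumptions: with all-ones weights and biases, each of the four nodes sees `x * 1 + 1`.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats (illustrative helper)."""
    shift = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Assumption: all-ones weights and biases, so every node gets x * 1 + 1.
x = 10.0
logits = [x * 1.0 + 1.0] * 4
probs = softmax(logits)
print(probs)  # [0.25, 0.25, 0.25, 0.25]
```

Because all four logits are equal, the result is uniform regardless of the input value.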

Categorical Cross Entropy

categorical_error = CategoricalCrossentropy()

categorical_error(labels_one_hot, output_with_softmax).numpy()

# Output : 1.3862944

We pass the one-hot encoded targets and the output from the model to calculate the loss value.
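The value 1.3862944 can be checked by hand. The sketch below is a simplified plain-Python version of the formula (mean over the batch of the negative sum of target times log-probability). The function name is hypothetical, and details of the Keras implementation such as epsilon clipping are left out.

```python
import math

def categorical_cross_entropy(one_hot_rows, prob_rows):
    """Mean over the batch of -sum(t * log(p)); no epsilon clipping."""
    losses = []
    for target, probs in zip(one_hot_rows, prob_rows):
        losses.append(-sum(t * math.log(p) for t, p in zip(target, probs)))
    return sum(losses) / len(losses)

# One-hot targets for labels 0..3 and the uniform softmax output from above.
one_hot = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
probs = [[0.25] * 4 for _ in range(4)]

loss = categorical_cross_entropy(one_hot, probs)
print(round(loss, 6))  # 1.386294, i.e. -ln(0.25) = ln(4)
```

Only the probability at the true class contributes to each row, so every row yields -ln(0.25).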

Sparse Categorical Cross Entropy

Now we will calculate the loss from Sparse Categorical Cross Entropy and see if the loss value matches with that of Categorical Cross Entropy.

We pass the targets as integers, along with the output from the model, to calculate the loss.
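Conceptually, an integer label simply indexes into the row of predicted probabilities. A minimal sketch of that idea, assuming probabilities as input and using a hypothetical helper name (again without the clipping a real implementation would do):

```python
import math

def sparse_categorical_cross_entropy(int_labels, prob_rows):
    """Mean of -log(p[label]) over the batch; no epsilon clipping."""
    losses = [-math.log(probs[label]) for label, probs in zip(int_labels, prob_rows)]
    return sum(losses) / len(losses)

labels = [0, 1, 2, 3]
probs = [[0.25] * 4 for _ in labels]  # uniform softmax output from the model

loss = sparse_categorical_cross_entropy(labels, probs)
print(round(loss, 6))  # 1.386294
```

This is the same arithmetic as the one-hot version; the one-hot vector is just replaced by a direct index lookup.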

sparse_error_with_softmax = SparseCategoricalCrossentropy(from_logits=False)

sparse_error_with_softmax(labels, output_with_softmax).numpy()

# Output : 1.3862944

Note that we used from_logits=False. Why is that?

If you check the model, you will see that we used a softmax activation in the output layer. Passing from_logits=False tells Sparse Categorical Cross Entropy that softmax has already been applied in the model, so it only needs to calculate the loss value from the probabilities.

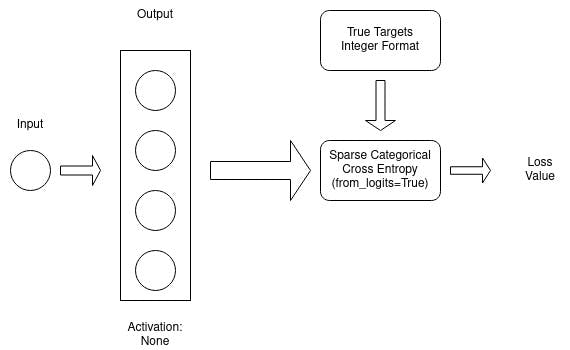

So how would we calculate the loss if we wanted to pass from_logits=True? Also what kind of change would we see in the model?

Model without Softmax

In this case, the model will not have a softmax activation at the output layer.

model_without_softmax = Sequential()

model_without_softmax.add(Dense(units=4, kernel_initializer=tf.ones, bias_initializer=tf.ones, input_shape=(1, )))

Note that the weights and biases have been initialized with ones. Next let's calculate the loss value.

output_without_softmax = model_without_softmax(input_x)

sparse_error_without_softmax = SparseCategoricalCrossentropy(from_logits=True)

sparse_error_without_softmax(labels, output_without_softmax).numpy()

# Output : 1.3862944

The loss value is the same as before.

Note that we passed from_logits=True in this case. This tells Sparse Categorical Cross Entropy that the model's predictions are raw logits, so it needs to apply the softmax function to them before calculating the loss value.
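As a rough illustration of what "apply softmax, then compute the loss" amounts to, the sketch below folds both steps into a log-sum-exp expression. This is a plain-Python approximation under stated assumptions, not the TensorFlow code path: the function name is hypothetical and the logits are computed by hand as `x * 1 + 1`.

```python
import math

def sparse_cce_from_logits(int_labels, logit_rows):
    """Mean of logsumexp(z) - z[label], which equals -log(softmax(z)[label])."""
    losses = []
    for label, logits in zip(int_labels, logit_rows):
        shift = max(logits)  # subtract the max for numerical stability
        log_sum_exp = shift + math.log(sum(math.exp(z - shift) for z in logits))
        losses.append(log_sum_exp - logits[label])
    return sum(losses) / len(losses)

# Assumption: logits an all-ones Dense layer would produce for each input.
inputs = [10.0, 7.0, 8.0, 9.0]
logit_rows = [[x * 1.0 + 1.0] * 4 for x in inputs]
labels = [0, 1, 2, 3]

loss = sparse_cce_from_logits(labels, logit_rows)
print(round(loss, 6))  # 1.386294
```

Working from logits in this way is generally more numerically stable than computing probabilities first and taking their logarithm afterwards.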

Summary and Key Takeaways

Categorical Cross Entropy vs. Sparse Categorical Cross Entropy:

- Categorical Cross Entropy is used for targets that are one-hot encoded.

- Sparse Categorical Cross Entropy is used for targets that are integers.

Uniform Loss Values:

- The loss values were the same when calculated with Categorical Cross Entropy and Sparse Categorical Cross Entropy.

Impact of from_logits Parameter:

- When exploring Sparse Categorical Cross Entropy, we examined the from_logits parameter. The loss value was the same with True and with False because each setting was paired with the matching model: from_logits=False with softmax outputs, and from_logits=True with raw logits. The parameter must agree with what the model actually outputs.

| Target Type | Output Activation | Loss Function | Parameter |
| --- | --- | --- | --- |
| One-hot encoded | Softmax | Categorical Cross Entropy | from_logits=False (default) |
| Integer | Softmax | Sparse Categorical Cross Entropy | from_logits=False |
| Integer | None | Sparse Categorical Cross Entropy | from_logits=True |
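One caveat on the from_logits takeaway: the uniform 0.25 outputs in this post hide what happens when the setting does not match the model. The sketch below uses hypothetical non-uniform logits and plain Python to show the arithmetic of applying softmax twice. It illustrates the math only; how a given Keras version handles a mismatched setting may differ.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats (illustrative helper)."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0, -1.0]  # hypothetical, deliberately non-uniform
label = 0

# Matched: softmax is applied exactly once before taking the log.
matched = -math.log(softmax(logits)[label])

# Mismatched: probabilities are treated as logits, so softmax runs twice.
mismatched = -math.log(softmax(softmax(logits))[label])

print(matched, mismatched)  # the two values differ
```

The second softmax flattens the distribution, so the mismatched loss comes out larger for this example (roughly 1.02 versus 0.44).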